Activity 12: Basic Video Processing

September 24, 2012

After having to go through numerous techniques in processing images…. we are now to try and apply these to videos. Videos are known as a series of images of an event for certain time intervals. Hence, we have to find a way to convert our videos into a set of images and run our image processing techniques through loops to make things easier.

Follow the yellow brick road trail of blood

One method used in studying the rate of flow of water / liquids is through dye tracing.Some applications of dye tracing are: water flow and rate, leak detection, pollution dispersion, etc. [1]. Choosing the right type of dye for your specific study is essential as the color and type are factors on your data collection. Taking for example green dyes which are suggested to be used on murky brown waters as it gives the best contrast [1]. Taking videos of the dispersion of these dyes through the solution or samples is one of the methods in gathering data for these types of experiments.

For this reason, we tried to simulate this experiment in smaller scale. A video of the dispersion of a drop of a red food coloring dye 30ml of different temperatures of water (hot, cold and tap) placed on a petri dish was taken and this was image processed. The effect of the temperatures of the sample to the dispersion rate of a dye was investigated. A Canon EOS 550D camera was used as the video recording device. It is known to have 50 frames per second recording ability. The video was then processed in Avidemux to be converted in a .avi file as well as for cropping off unwanted portions. The .avi file was then exported as a series of images with the ‘frames per second’ decreased to 2.5 frames per second. I was able to decrease the fps value in the program VirtualDub and this was done because making use of the typical 50fps gave me 1500 images to be processed.From visual inspection, the the change in one image to another is not that significant and hence reduction of the fps is fine just as long as the growth is observable in a smooth transition. The animations (gif) of the dispersion for the different cases below are as displayed below:

What we basically have to do is to segment our image so that we’d end up with only the dyed portions of our videos (recall Activity 11). The area of the segmented regions was then determined by simply counting the pixels having the same threshold value as the edges were not that defined as what was done before (recall Activity 4). But since we are dealing with a series of images , looping through images can be done to save time and effort (recall Activity 10).

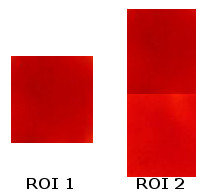

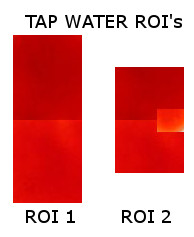

For the color segmentation portion, a region of interest (ROI) should be cropped off the image. Below are two ROI’s I have used:

The use of the 2nd ROI is necessary as after some time, the drop of dye disperses further and further resulting to a less concentrated color (lighter version). Since changing the ROI’s in a middle of a loop is complex, I instead opted to combine the lighter and darker versions. We are dealing with the mean and the standard deviation of the ROI hence ROI2 will give way into considering lighter shades as well.

Opening our images through loops is done through the use of the strcat() function. Below is the code I have used for the looping portion:

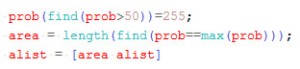

Making use of the code in Activity 11, it is not enough to simply count the pixels of a certain threshold value. The output of the segmentation resulted to pixels of differing intensity values and simply calling the maximum will give a small number(80 pixels only). I had to make use of the code below to resolve this issue:

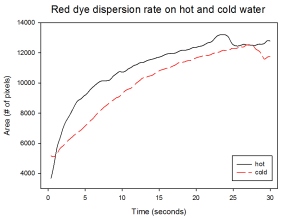

With my images sampled at a rate of 2.5 frames per second, I created a counter which would add 0.4 seconds for every image processed on my ‘time’ list. This gave me two lists (area and time) which lead me to the creation of the graphs below:

The best-fit trend was observed to be the exponential rise to a maximum as determined through the Sigmaplot plotting program. With the droplet being at its most concentrated state at the beginning, the dispersion is expected to be at its fastest rate. After some time, the dispersion slows down as there are less and less particles pushing their way through the sample.

As for the effect of the temperature of the sample to the dispersion rate, the plot shows that there is a difference between the hot and cold water samples. It was apparent that the dispersion was faster and that the area was larger for the case of the hot water. With higher temperatures signifying faster moving particles in the sample, it is then logical that the dispersion of the dye should be faster for the hot water sample. The dye particles are also being dispersed by the particles of the water itself rather than by the dye particles colliding with one another. On the other hand, lower temperatures signify slow moving particles which gives a logical reason to the slow dispersion rate as observed from our plots.

For some weird reasons, I had a hard time trying to get a nice dispersion plot for the tap water sample. With the use of the ROI2 I have used for both the hot and cold samples, I end up with a plot that suddenly decreases after some time which should not be the case! Looking at the progression of the image, the area tainted by the red dye still increases! As a solution, I had to include another cropped ROI on my current ROI as seen below:

This problem shows the need for the consistent background light for the segmentation to be a success. With the sunlight used as our light source, there will indeed be instances wherein the shades of our sample will vary.

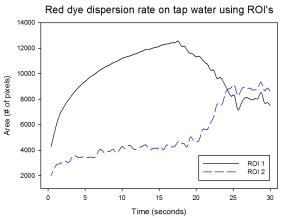

Making use of our ROI 1 and ROI 2 for our tap water sample, we end up with the plot as observed below:

The first ROI shows an exactly similar trend to that of our hot water sample for small values of t. This may indicate that the tap water temperature is still within the range where the dispersion rate is relatively fast. However, the sudden decrease in the area as detected by the ROI caused the inability to look at the rate of dispersion at later times. Making use of ROI 2 displays a different trend wherein the plot increases at an exponential trend. The sudden increases at later times shows how the values of the intensities of the pixels were much closer to our ROI (With the inclusion of the tinge of bright red)… However the downside is that at earlier times, only small amounts were segmented as the images were dominated by darker shades of red. This shows the limitations of this technique wherein the shade of the region of interest should be consistent for all times. A possible solution would be to vary the ROI’s for certain intervals so that we’d have accurate data for the area of the tainted sample.

As an additional portion of our activity, we tried looking at the difference between two colored dyes. Also, we have initially added a yellow dye on our sample so that our background has its own color. Through this, we are to investigate the effectivity of the segmentation of the desired regions despite a different background color of our sample.

Making use of the ROI2 for our red on yellow set of images while the green on yellow images’ ROI is as presented below:

We have determined a dispersion plot showing that there is indeed a difference between the two dyes:

Again, despite having a yellow dye mixed to our water sample.. the general trend for the red dye dispersion still replicates the results from the earlier portion of this activity. The green dye on the other hands shows how it disperses at an even higher rate (as based from the slope) after a certain period of time (t = 3 seconds). This shows the difference in how the dyes react to the sample. The concentration per drop for the different colors differ hence resulting to different dispersion rates.

Note: For this activity, I was able to enjoy and at the same time learn a lot of things. Hence I would like to give myself a grade of 11/10 for getting nice results and at the same time successfully applying all the techniques I have learned throughout this course. We were also able to look at not only the effect of the temperature of the sample but also the type of dye (concentration) used. Overall, this activity was for me the most fun as it acted as a little investigatory project.

References:

1. “Guide to Dye Tracers”, Professional Equipment. Available at http://www.professionalequipment.com/guide-to-dye-tracers/articles/

PS: As much as I’d like to type in more input… the internet here in our place is crappy that I had no choice but to rush in blogging about this.. Anyway, I plan to fix this blog up even though the deadline’s over XD

Activity 11: Color Image Segmentation

September 11, 2012

If from our previous activity we isolated certain features in the image through the simple thresholding and a little bit of morphological operations, this time… we are to try and isolate certain features of a specific color.

In drawing anime or sketching… a factor that makes these art pieces realistic is through its shading. There will be portions in an object where it’ll appear darker or brighter, depending on the location of your light sources.This is the reason why in taking an image of a 3D object, we won’t end up with a matrix having exactly the same values per R,G,B layers. Hence, the first step in color image segmentation would be to pick out the ‘Region of Interest (ROI)‘.

For this activity, I made use of two different images to be able to observe the effectiveness of the technique. The images are as seen below:

where my chosen ROI’s are the cropped portions as seen below:

Seeing that simply making use of the RGB values of our ROI’s would lead to the segmentation of only a small region, the determination of the rgI values is desired. This is so because the normalized chromaticity coordinates has a separate component for the color purity (chromaticity) and brightness of a shade. The conversion is done through the use of the equations below:

What you’ll do with these rgI coordinates depends on your preference. Two methods are available in color segmenting images: Parametric and non-parametric.

Parametric Segmentation

The main concept in the parametric segmentation is to calculate the probability that a pixel in the image is still within the range of the pixels from our region of interest. Taking the mean (through the mean() function) and standard deviations (stdev() function) of the r-g coordinates in our region of interest and making use of the Gaussian distribution with r and g coordinates of each pixel in the actual image:

The resulting product of the r and g Gaussian distributions (p(r)p(g)) yields our final image.

As for my spices image, I ended up with these two images:

It is clear that having a larger ROI results to more portions of the image of a specific shade to be included. This is so because we are considering a larger mean and standard deviation values.

On the other hand, the iced tea image with its corresponding ROI produced:

Seeing that we have used a small ROI as well as the gradient of the ‘light blue shade’ is very large… only the bottom portion of glass of iced tea was included.

Nevertheless, we have managed to segment our images! Cool right?

Non-parametric segmentation:

Unlike that of the parametric segmentation, this method won’t be making use of any distribution whatsoever. What we’ll basically do here is to look at the 2D histogram of the r-g coordinates of our ROI. We then make use of what we’ve learned from Activity 5: Enhancement by Histogram Manipulation. What makes things a tad bit more complex is that we now consider 2-Dimensional backprojection.

The 2D histogram of the r-g coordinates should give an output image that kind of resembles that of the NCC diagram when rotated. Below are the 2D histogram for each of the images I’ve used:

From inspection, we can clearly see that the coordinates correspond to the red region. Also, the difference in the shades of our two ROI’s is apparent here as the larger ROI gave out even more points in our histogram. On the other hand, the iced tea ROI gave out the histogram:

Since we have a very small ROI for our iced-tea image and through visual inspection it really appears as if it’s monochromatic, the resulting histogram appeared to be a single pixel! Nevertheless, for both cases… the location of the 1’s of our histograms was able to correspond to the colors of the ROI’s successfully.

Now taking note of the r-g coordinates of our original image and replacing their y-values with their corresponding y-values in the histogram of the ROI, we end up with the segmented images:

There is an evident difference between the end-products of the two methods. The non-parametric segmentation appeared to have considered more points as the iced tea image no longer have the ‘gradient’ effect at the center like that of the parametric segmented image. Again, this is due to the usage of a Gaussian distribution where indeed gradients should then be expected. Personally, I liked the parametric method much better. I like its effect to the image where it looks a bit more 3D as compared to the non-parametric image looking like a simply binary image.

Note: For this activity, I would give myself a grade of 10 😀 I’ve managed to do the activity and at the same time enjoyed it XD. I can’t wait to make use of this to our next activity (face recognition?) XD.

Random Blabbers: I remember when I was searching for an image to use, I was struggling as I’m very much in love with images of different colors… I had to find an image containing a significant amount of certain colors with a distinct feature. In the end I managed to enter my Photonics picnic album and it was at around 2am then. I seriously scanned through mouthwatering images of BBQ’s and shrimps that my tummy rumbled in annoyance ToT. Thankfully, there was this peaceful image of spices that I ended up with. Lesson learned? Never open an album full of food in the middle of the night XD

Can you feel my annoyance as well? 😀

Activity 10: Looping Through Images

September 2, 2012

The first part of the activity is to determine the average size (in pixels) of a desired shape scattered all over an image.

Starting of with our original image of scattered punched paper left-overs, I have divided the image into 9 equal sections through the use of the crop() function (which was discussed previously) in Scilab. As seen in my code below, there is an obvious pattern as to how the cropping thing works in Scilab:

However, whenever I try to append the individual matrices of the ‘cropped’ portions to a new list so that I can just call them one by one inside a for loop… they always end adding up which ultimately leads back to the original uncropped image! I had no choice but to individually save these images and call them whenever it’s their turn to be processed. Also… I know I can easily make a loop to call the divided images and process them.. However I chose not to as the images have different features on them. The end-product of a certain set of morphological functions may seem to fit image A but then it might not have that much effect on image B.

Anyway, going back to the activity proper…. All images were binarized through the use of the im2bw() function. What’s nice about this function was the presence of the threshold parameter. Varying the threshold enabled me to choose the value which gave the best output only having the circular patterns we want. Besides having to determine the threshold value through inspection, the use of the histogram could be done as displayed below:

The next step in finding the average size of these circles is to try and separate them. This is where the powerful and oh-so-useful morphological functions play. Using different combinations of the ErodeImage() and OpenImage() functions with different Structural Elements, I ended up cleaning the image a little bit. HOWEVER, there were some very persistent circular patterns which I could not separate no matter what type of morphological functions as well as structural elements I’ve used. Opening and Eroding the image too much causes significant loss to some of the already separated circles. Hence, I just decided to leave them as is and dealt with them later on.

Having cleaned up our image, we can now separate the circles not only physically but also through labels. How do we do that? I have already introduced the bwlabel() function available in SIP in my previous activity… However, I have found that SearchBlobs() function more useful as the IPD toolbox has a lot of functions that makes the whole thing easier :). The only tricky thing in dealing with the blob functions in IPD is that the output of these functions are changed into different types (uint 32). Simply calling the outputs would lead to an error. Thanks to Dr. Eng. (J) Harald Galda, 2011’s tutorial PDF available in here, I have finally found a way to display their outputs which is through the use of the ShowImage() function and the conversion of the output to uint8(). A test to see how much ‘blobs’ were detected by the function is by typing in max(BlobImage), where BlobImage is the output of the SearchBlobs() function.

As I’ve said earlier, there are a lot of blob functions useful in the IPD toolbox. One is the FilterBySize() functions. What this does is that it removes the ‘blobs’ having certain areas smaller than what you’ve indicated. This function asks for two parameters: (a) the output matrix of your SearchBlobs, (b) the threshold area. This enabled me to remove more unwated blob leaving me with only the blobs pertaining to the circles. This is observed by yet again using the max(Filtered) function:

max(BlobImage) gives out 15 while max(Filtered) gives out only 10!!

As an additional note, the jetcolormap() was used to show how each blob was recognized. The different shades of colors simply shows that the respective blobs is considered as a single entity.

Now that we have successfully labeled the respective circles, it is now time to find their areas! Using the code I have used in the note-recognition portion from the “sing me a song” activity… I am able to easily get the respective number of pixels contained in each circle. But then you may have noticed that certain blobs are actually composed of two or more circles that I have failed to separate earlier… The only solution I could think of is through the use of the if functions as seen in the code below:

Take note that the code above is not the final code I have used… I varied the conditions in the if function depending on the image I’m dealing with..

As a result, I end up with the following table:

Taking the average of these values gives me the average area of each circles to be 424 pixels with a standard deviation of 81 pixels.

The second part of this activity tests how we are able to manipulate images from the things we’ve learned so far. Given a new image of scattered punched-paper left-overs only this time there are overly large cut outs, we are to detect these cancer cells!

Again, we start off by binarizing the image through the im2bw() function with the corresponding threshold value. The next thing is to make use of different morphological functions again so that we can separate the circles that appear to be overlayed. I did everything that I can to end up with the image below:

Even though we can now clearly see the cancer cells we so want to detect…it is noticeable that there are still large clusters in our already processed image! Using the SearchBlobs() function and again the FilterBySize() function with the corresponding threshold value chosen by inspection, I am now left with this:

I am still left with two unwanted blobs! Who would want to pretend that they’re cancer cells right?! Varying the threshold value I was using for the FilterBySize() function already removes a cancer cell that apparently is smaller than an unwanted blob. I could no longer find a way to remove these unwanted blobs further…

However, playing around with Scilab me to the discovery that I can actually convert this to a matrix with each of the blobs having their own respective grayscale values. Luckily, the cancer cells have values lower than the two unwanted blobs! This then enabled me to remove them by simply using the find function as well as specific conditions (only those of intensities lower than 80% of the maximum of the matrix and greater than 0 will be included).

I know it’s a bit messy but it was able to give me this image:

FINALLY!!

Note: For this activity, I would like to give myself a rating of 10 as I have managed to do all the requirements. I bet this activity would’ve been fun to do but due to the load I currently have (with the upcoming SPP deadline and papers and midterms and problem sets all due at the same time)… I had to rush into finishing this activity sooner. I know I could’ve explored on the things the blob functions in the IPD toolbox can offer but having my help files in Japanese… I had to do my own tricks for certain portions of this activity.

Activity 9: Playing Notes by Image Processing

August 22, 2012

If previously we had to deal with the handwritten notes, this time we would be dealing with another type of notes.

I have always been fond of music and have considered it to be essential in life. Just like any other languages, music has the ability to make you understand a message by not only its lyrics but also based on the tone of the music. Music can be produced through vibrations of materials and the notes vary by the frequency of these vibrations.

With these concept, Scilab is able to play music for us! Shocking right? It simply requires the frequency of the notes you want to play as well as the duration of the note. Making use of the note() function which asks for these two parameters and placing all notes inside a list []… the sound() function does the singing for you!

The Sound of Music:

Before anything else, a musical sheet is needed. It was instructed that a simple musical sheet is best used first so as to keep things simple.. However, since most of my classmates are starting of with the most basic “Twinkle twinkle little star”,I instead opted to use of my favorite songs by the Beatles 🙂

All My Loving – Beatles

I’ve divided this musical sheet into three rows respectively. Each row will be image processed respectively so that I’d end up determining the corresponding notes from this musical sheet. Calling these rows in Scilab, the lines can be easily removed by making use of the im2bw() function where the threshold value is set to the point where we’d only end up with something like this:

Notice that I have cropped the image where the left most portion of the musical sheet is removed as this is not essential for our case. A cropping function is available in Scilab: imcrop(x,y,W,H) where the x and y values are the coordinates of the point where you’d like to start cropping and the W,H the width and height of the portion which you wish you to leave.

Now since more or less we are left with the marks that we want… we only have to deal with a few more. I have tried using morphological functions however I would always end up erasing some essential portions in from my notes. The only solution I could come up with was to mask these unwanted portions. I eventually resorted to this method as my chosen musical piece is a bit complicated. If I have only chosen a simpler model, I’m pretty sure that I’d end up instantly getting a clean image after just a few steps :).

Anyway, the masking procedure is done by creating masks first. These masks are black on white patterns where the black portions stand for the locations of the pixels you wish to no longer appear on our image. Using the image above as our reference, the corresponding masks I have made are as follows:

Calling this in Scilab and multiplying this to our image so far.. the unwanted points will be multiplied to zero eventually leaving us with only the points we desire.

Now at this point, we only have the most essential portions from our musical note. We can clearly distinguish which notes are which. From the first row, the nine quarter notes are seen as solid white blobs whereas the two half notes seem like incomplete white blobs. The same goes with the second row only this time there is only one half note and twelve quarter notes. Lastly, the last row shows seven white blobs which stand for our quarter notes while the single whole note looked like two smaller blobs a significant distance apart. Still, this is different from our half notes where the half notes’ distance is smaller. This then is enough to distinguish one note from the other and hence gives way for us to move on to the next portion of our activity.

The code that I used in identifying the respective type of note (duration) of each blob is available below:

Code I have used for rows 1 and 2. Row 3 will simply replace t3 as we are now dealing with whole notes instead.

This makes use of the functions from the SIP toolbox (as I still have yet to figure out how the Blob functions work in the IPD toolbox). From our final images processed above, we run this through the bwlabel() function. This function labels the ‘connected’ blobs separating each and one of them. The L output is actually the matrix of our image wherein the blobs are relabeled (one blob will all have values equal to one, the next will have a value equal to 2 etc.). The n output of the bwlabel function on the other hand gives out the total number of blobs the bwlabel distinguished. Thanks to a site, I figured out that the bwlabel function in SIP actually scans the image for the blobs from top to bottom. For our case, we hope to scan it from left to right instead. Hence, I had to use the transpose technique seen in my code. The rest of the code basically instructs that if the area of the blobs have a specific value, it will have a corresponding note type (or duration). I have done this as the difference in the area of the blobs are obviously significant for our different note types.

The determination as to which frequency these notes have is the next objective in finally making Scilab sing my song. From the results that we have, I basically had to do morphological operations to end up with only single pixels as a result for each note. With this, the code I have used in localizing is available below:

What the code basically does is that it finds the location of all the single points we have. I have also found out that the matrix in Scilab is indexed in this manner:

hence.. I made use of the modulo function that outputs the remainder of a number if divided by another number. The other number I used is the height of the image we are currently dealing with while the number to be divided is the index from the find function. Through this method, I get the location of the specific note with respect to the vertical axis. This enables me to set ranges for which certain distances from the top is indicated.

With all these.. I have compiled the results from the three rows giving rise to the music below:

Take note that the singing in scilab makes use of the following code as provided by Mam Jing Soriano.

function n = note(f, t)

n = sin (2*%pi*f*t);

endfunction;S = [note(typeofnote, duration)….];

sound(S);

know it didn’t exactly sound like the original but blame it to the fact that this musical sheet required the use of complex symbols. Also, rests in between certain sets of points are required as well for our case to ultimately duplicate the music of the Beatles. Nevertheless, if this was done to a much simple musical piece, there is no doubt my code would be able to sing this properly 😀

For this activity… even though I was unable to really replicate the sound of the Beatles’ All My Loving.. I would like to give myself still a grade of 10. This was based from the fact that I was able to accomplish the objective of this activity which is to find a way for Scilab to read and sing musical sheets. I have made use of different techniques coming from different toolboxes from Scilab and through these I have managed to learn a few more things such as the bwlabel functions. Also I was pretty excited by the fact that I was able to localize the points of the notes through only the use of the find and the modulo functions! So I think with all that I did I deserve this grade 🙂

Activity 8: Preprocessing Text

August 22, 2012

From all that we’ve learned so far, we are now to try and test our CSI/Detective/Stalking skills. The next three activities requires the use of the different techniques learned, especially the morphological operations, to manipulate the images in Scilab.

In preparation for the said activities, I had to ensure that different toolboxes in my Scilab are functioning well:

- SIVP in Scilab 5.3.3 – the installation process of this toolbox is very straightforward and has been discussed in the earlier chapters.

- SIP in Scilab 4.1.2– with the SIP toolbox being a bit moody back in my Ubuntu operating system (as discussed earlier), I was forced to install a lower version of Scilab in my Windows OS and eventually put in SIP there. The installation of this toolbox is straightforward HOWEVER upon choosing the SIP toolbox…. you’d get loads of errors!! Thanks to Ms. Charm Gawaran’s hint… this can be easily resolved by typing in the code:

chdir(ImageMagickPath);

link(‘CORE_RL_magick_.dll’);to the client and then clicking the SIP toolbox again will finally properly load SIP 😀

- IPD in Scilab 5.3.3 – As suggested by Mam Jing Soriano, we can download the IPD toolbox through here. Unzipping the file and placing the folder inside the Scilab 5.3.3 –> contrib folders, the installation can only be completed upon typing in the following command in the client.

atomsInstall(‘IPD’)

This toolbox will prove its purpose as there are a lot of morphological operations (and other image processing functions) available here. In fact, this toolbox was the one I most used for this activity.

So now that we are fully equipped with all the functions that we need. Let us now proceed to our mission for this episode 🙂

Stealing Signatures:

I know it’s really a bad idea to try and steal someone’s signature but…. I find this title fitting for this activity. Why? Well.. we are given a scanned document as seen below this paragraph. Making use of only Scilab, we have to figure out a way to retrieve the handwritten words of a portion of this document and have it cleaned up ending with a clear, binary image of our chosen words.

However, it was indicated that the use of other image editing devices (such as Photoshop or GIMP) is allowed only for cropping your desired portion as well as re-aligning the image so that the lines coming from the table is not tilted. The alignment of these lines will play an important role later on…

However, it was indicated that the use of other image editing devices (such as Photoshop or GIMP) is allowed only for cropping your desired portion as well as re-aligning the image so that the lines coming from the table is not tilted. The alignment of these lines will play an important role later on…

Taking notice that this image is actually a Truecolor type of image, we expect to have RGB layers upon calling this in Scilab. Instead of immediately converting the image to grayscale or black and white, I looked at the different layers of the RGB image and compared them to one another first. This was done because it was noticeable that the handwritten words are not exactly colored black but rather blue. I was hoping thatupon looking at the “blue” layer, the handwritten would have the highest intensity and hence can be easily detached from the rest of the image. However, the images below seem to tell another story…

Since what I was hoping to find was not apparent (and in fact the blue layer had the smallest intensity among RGB layers!), I just had to make the most out of my effort. From here, I picked the layer which gave the highest contrast. This was done so that I can easily eliminate the noise coming from the paper (it’s texture or small particles) hence leaving us with only the handwritten words and the horizontal lines. I compared the red layer to the binarized version of the original image as well as its’ grayscale to see which image produced the highest contrast. It was still the red layer that gave out the image with the highest contrast so this was used for the next steps of my image manipulation.

The next thing I did was to try and remove the horizontal lines off our image. This can be done by investigating the Fourier transform of the image. From the previous activities, repetitive patterns will appear as distinct patterns in Fourier space. Through this, we can easily determine which points in the Fourier space are the ones responsible for the horizontal lines. This can be removed by making use of a mask that removes the said patterns off the Fourier transform of our original image. The results are presented below:

The code used is available below where I is the matrix of the red layer of our original RGB image and F the filter used to remove the unwanted patterns off the Fourier transform.

In addition to the FFT of the image, I had to properly scale the resulting inverse Fourier through the code seen in lines 25-26. The intensity of the resulting image was easily varied by simply multiplying this to a constant (which for my case was 3) so that I’d end up with the contrast that I wanted. With Mam Soriano’s reminder that the image should be white on black, we “inverse” the image by using the imcomplement() function. And finally, we binarize the resulting image with the threshold that best fits our taste (0.55 for my case).

What’s our end-product so far?

From the looks of it, the image no longer has the horizontal parallel lines coming from the table. However, due to its removal… some points that belonged to the words were removed as well. Also, there are still noise visible. The only solution to this is to make use of different morphological operations :).

Besides the typical erode and dilate operations we have learned from the previous activity… the scilab IPD toolbox presents other morphological functions such as close and image (which basically is a combination of dilate and erode). I have also learned from this portion of the activity the importance of the structural elements we make use of. Thankfully, the IPD toolbox has the CreateStructureElement() function which asks for two inputs. The first input is the type of structural element you wish to have. There are already built in codes for the typical shapes such as square, circle rectangle, etc. If on the other hand you wish to make use of your own structural element, the term ‘custom’ is for you. The second input in this function asks for the size of the structural element you wish to have. If for the case you make use ‘custom’, the second input directs to the matrix of your ideal structural element containing boolean terms %T and %F (instead of the usual 1’s and 0’s).

As much as I’d like to show the different structural elements that I have used, the imshow function does not work alongside the output of the CreateStructureElement() function. Hence I would just be listing down the code and leave it to your imagination 🙂

A. Eroded the current image we have so far with its’ structuring element a rectangle of dimension [1, 3].

B. Dilated the image (A) with structuring element a rectangle of dimension [2,1].

C. Dilated the image (B) with structuring element following the matrix [%F %T; %T %F]).

D. Dilated the image (C) with the structuring element a rectangle of dimension [3,1].

What exactly was I doing? Well.. I wanted to find a way for the gaps from the removal of the horizontal lines be brought back through morphological functions. I had to constantly dilate the image in a way that it closes the gaps BUT the letters from the note is still distinguishable. This was the best combinations I could get… and sadly it is not looking good so far. I could’ve stopped at point B or C however the gap still exists! In fact, A is good enough for me already but the gap was very very persistent.

Wanting to end up with only single pixel thick letters, I had to shift to my Scilab 4.1.2 to make use of the thin() and skel() morphological functions available in the SIP toolbox. The results are as follows:

The skel function gave the outline of the letters which I think has words that are readble (such as CABLE). On other hand, the thin function takes the central points of your shapes. Due to the intense dilation from my previous steps, I ended up different squiggly lines that looked as if certain words were connected to one another. The word “CABLE” on the lower right has only the letters C and a to be readable.

I really tried my best in finding the right combinations but I somehow ended up with unreadable images still! The best image that I got came from the images with the gaps… In fact I tried thinning point A as I find this image readable for me and ended up with:

were I think the word Cable as well as the B from the “USB” was readable. I tried close the gaps through another set of morphological function but I ended up ruining the image. This then gives me the best image I can post process…

Personal Note:

Hence, with my resulting image a bit tacky and not being able to end up with all the words being readable… I would give myself a grade of only 8. Though I think I should give props to myself as the portion I have chosen to post process was actually one of the hardest. It dealt with handwritten cursive font letters as well as the whole portion embedded with horizontal lines. But still, I was pretty much disappointed with my results. Every morphological operation I did has its advantages as well as its disadvantages. I tried to cleverly think of new structuring elements but I always end up messing the image even more. 😦

Activity 7: Morphological Operations

August 2, 2012

In preparation for an extreme ultra-CSI episode that’ll be up next after this activity, we have to familiarize ourselves with another technique in image processing that will be handy in future use.

The word Morphology already gives you a faint idea that it deals with shapes and structures. Morphological operations are commonly used on images to enhance, isolate, and improve its details. However, these operations are carried out only on binary images with its elements lying on a 2-dimensional space.

A quick review of the set-theory is needed as this serves as the main rules of our operations. The image below will serve as our reference point. The following elements can be seen:

Set A – pink box, Set B – purple box, C – region within the green blue outline, Set D – yellow box, and the elements butterfly and running man.

- The butterfly is an element of Set A whereas the running man is not.

- The complement of D are all the elements outside it (Set A, butterfly, doorman and the whole region of B that is not within D).

- Set D is a subset of Set B.

- C is the intersection of Sets A and B.

- The union of Set A and B is the whole pink and purple regions together.

- The difference of Set A and B is the whole pink region excluding those within the blue green outline.

In addition, translation is simply the shifting of the element to another point.

For the morphological operations to work, two images or sets are required. The main pattern will serve as the image we hope to increase/decrease its size. The structuring element on the other hand will serve as our basis on how much will the original pattern change and as to how it will change.

Dilation – Super Size Me!

With your structuring element having a set origin (where this is where we will be placing an element if certain cases are met), this is translated to every point of the main pattern. The points at which at least a single element of your structuring element intersects with your main pattern, the origin will turn into an element of the dilated set.

A larger structuring element will then result to a larger output. If in case a single pixel is considered as your structuring element, we will end up with the exact image as our main pattern.

Erode – The Biggest Loser!

As for the case of the erode operation, the resulting image will be smaller as compared to the original image. This is because this operation considers the points at which your structuring element fit inside your main pattern.

——-

To start of with the activity, we first have to determine the images we want to apply the operations. As indicated earlier, there should be two inputs involved: the main pattern by which we will be eroding / dilating and the structuring element which will be the basis as to how much will the original pattern be eroded/dilated.

For the main pattern, four different shapes were used as drawn below:

(L-R): A 5×5 square, a triangle with 4 units as its base and 3 units as its height, a cross that has a length of 5 units for each strip and lastly a hollow 10×10 square that has a width of 2 units.

The structuring elements on the other hand are as shown below:

Going old-school:

Before taking advantage of the gifts of technology, we are to test if we have really understood the concepts behind the dilate and erode morphological operations by going old-school. Through the use of a graphing paper and a marker, the resulting shape as our main patterns are eroded for a chosen structuring element is predicted and drawn.

Starting with erode, we are to expect that the shapes would be smaller as compared to the original pattern. The matrix of images below shows that I have managed to get shapes that are indeed smaller. In fact, there are instances wherein the original pattern is totally removed! This is expected for certain shapes as the erode operation deals with points at which your structuring element perfectly fits the main pattern. Take for example the case of cross main pattern where the 2×2 box structuring element is impossible to embed as the width of the cross is only 1 unit! Hence, the resulting eroded shape is nothing.

In contrast, dilating the shape results to a relatively larger pattern which I have managed to observe from my predictions. With the dilate function considering the points at which the structuring element still overlaps on the main pattern even if the overlap is only a single pixel.

Consistency please?

Knowing what we are to expect upon dilating and eroding all our patterns and structuring elements together, it’s time to find out if my predictions are correct. The operations can be carried out in Scilab as readily available functions for morphological operations are present in the SIP and IPD toolbox.

With the sudden death of my Ubuntu system, I have finally accepted defeat and opted to download the Scilab 4.1.2.. This version of Scilab matches the last version of the SIP toolbox amidst having lots of flaws such as being unable to run .sce’s so I usually end up typing my codes in notepad and pasting them on the prompt.

Through the use of Photoshop, I recreated the main patterns as well as the structuring elements as observed below:

The important thing to take note in creating these patterns is the consistency of the scale of your units. Initially, I downloaded a graphing paper page from the internet and used this as the basis in creating my patterns. However, upon eroding and dilating them, the results produced images that seemed to not fit the units that I have! I had to redo my patterns again using 3×3 pixels as a single unit.

Now after calling all our patterns into scilab through the imread() function, it is best to ensure that the matrix of your patterns is strictly binary and not in grayscale (afterall, we are talking about binary operations!). This can be easily done by the im2bw() function with my threshold point set to be at 0.7.

The erode() function calls for two inputs: the matrix of the image you wish to erode and the structuring element. Using this through all our patterns gives rise to the matrix of images below:

Comparing this matrix to my matrix of images of old-school eroded patterns, I am glad to say that they are exactly the same! The only difference between the two are their scaling.

Similarly, the matrix of image for the dilated images show that the digitally dilated images as well as my predictions are the same.

Personal Note:

Despite getting the right results from my predictions, it took quite awhile before I managed to fully understand and master the art of eroding and dilating. I had the hardest time taking to heart the process of dilation and it was all thanks to Mr. Xavier Tarcelo that I finally figured it out through the use of an acetate in which I had to move box per box on my graphing paper. For this activity, I would like to give myself a grade of 10/10 as I have managed to accomplish all the requirements in the activity as well as understand the techniques and concepts.

References:

1. Soriano, Jing. Applied Physics 186 Activity 7 – Morphological Operations. NIP, UP Diliman. 2012.

Activity 6: Enhancement in the Frequency Domain

July 25, 2012

Taking our stalking/detective/CSI-ing skills to the next level, we now try to post-process images in trying to enhance or remove certain portions without completely destroying the details of our image. This is beyond the abilities of any image processing programs such as GIMP and even Adobe Photoshop (as far as I know)! So this means that what we’ll be doing is something not everyone knows.

Why the need? Well… let’s say you want to try and analyze a certain letter from a kidnapper but then they tried their best in trying to erase it through scratches. Fear not for these scratches can be removed by proper filtering. How? We have to deal with the Fourier transform of images to be able to see these scratches in a new light. But before going further, we first have to familiarize ourselves with the most basic of things in the FFT world:

Familiarization with the FFT of Common Shapes:

Before going through the complicated images we hope to clean/filter/go-detective-with…. we first have to familiarize ourselves with the FFT’s of common shapes. Why is this necessary? Well, if we are now dealing with images that have ‘patterns’ on them, knowing the common FFT’s of certain shapes and patterns will immediately aid us in giving an idea as to what type of filter we should be using.

It took awhile before I finally mastered the syntax in Scilab for the different FFT applications. Below is the code I’ve used in getting the FFT of a certain pattern/image (represented by the variable m). For some odd reason, the use of the fft() and fft2() functions did not give out the desired results. Thanks to Mam Jing Soriano for figuring out that the working function for our Scilab 5.3.3 and SIVP toolbox is through the multidimensional FFT or mfft() function. I mean honestly… the matrices of our patterns and image are 2D right? So I really can’t comprehend why the fft2() function won’t work.. Anyway, the mfft() function asks for atleast 3 parameters: matrix of your object you wish to take its FFT, -1 for FFT or 1 for inverst FFT and lastly the dimensions of your object. After taking the fft of the object, immediately displaying this won’t give you a nice output. We still have to make use of the fftshift() function so that we’d get resulting patterns centered at (0,0) AND also have to take its abs() as the output will be complex!

In Scilab, the command imshow(FTmcA) will not display the output we are looking for. Again, I’m pretty much puzzled as to why complications exist in Scilab…. Again, Mam Jing Soriano was able to figure out that grayplot() function can give out the proper display of our fft’s. PHEW!

The first pattern deals with two dirac delta functions placed symmetrically along the x-axis. Basically this’ll look like two dots along the x-axis. Constructing the said pattern through the code as seen below:

gives an FFT that looks like the repetitive strips parallel the x-axis and continuously moving along the y-axis.

Through this, the FFT of two dots placed symmetrically along the y-axis will have stripes that are parallel to the y-axis.

Increasing the diameter of our ‘Dirac delta’s’, the next pattern deals with two circles. Again, this pattern was generated through the code:

which we have been using since Day 2 (this just proves that what we learn from the past can always be handy in the future 🙂 ). However, I’ve learned that this method does not necessarily give out perfect circles. You can actually see that they appear to have ‘corners’ …

The output looks like an Airy function (the bright circle that eventually fades as it propagates) with sine waves embedded in them (the black stripes). Take note that if we instead have a single circle, the FFT will look like an Airy function without the additional sine waves in it. Besides simply looking at the FFT of the circles, it was also observed that diameter of the central bright spot is inversely proportional to the diameter of the size of the circle.

If instead we consider squares brought by the code below:

The FFT looks a sinc function propagating along the x and y axis.

Similar to the case of the circles, it was observed that upon increasing the size of the squares, the size of the central bright fringe appear to be decreasing as well.

Not far from the circular patterns used earlier, the next pattern dealt with two Gaussians. Gaussians are commonly used as they resemble actual point sources in real life. The construction of the Gaussian pattern was done through the use of the fspecial() function (which I’ve managed to make use from my earlier activities already). The resulting Gaussian patterns and their corresponding FFT’s are displayed below:

Since we have a pattern that kind of looks like the circles, we’d expect the resulting FFT to be like that of the Airy function. Only this time because the edges of the Gaussian is a gradient that eventually goes to 0 intensity, the outer circular rings beyond the central bright circle was already negligible as its’ intensities immediately decrease. It was also observed that since we have two Gaussians in our pattern, the Airy function has “sine waves” embedded on them as well. Also, as the size of the diameter of the Gaussian was increased (through increasing the value of sigma in our equation), the diameter of the central circular pattern in its FFT decreases.

Now that we look at it, the FFT’s are actually the diffraction patterns of a laser as it passes through a certain shaped-aperture! In fact, there’s an equation that gives the resulting electric field of a light beam upon passing through an aperture.. Of course, that’s beyond the scope of our activity already though :).

As a last test, the pattern now deals with evenly spaced 1’s along the x and y-axis. This was generated through the code:

This is of interest as the resulting FFT looks something like a weave…

Recall that the FFT of two points along the x-axis looks like stripes propagating through the y-axis. Now that we have more than two points and add the fact that they are evenly spaced AND that we also have the similar points placed along the y-axis, evenly spaced weaves looks reasonable enough as its FFT.

Why was this taken? Well… this looks like a pattern we’d usually encounter with our things right? Close-up version of your jeans, baskets, etc. We might just find this useful later on.

Honey-Stars Special:

Another thing we’d have to familiarize ourselves with is the concept of convolution. Convolution is the method by which the Fourier transform of two functions are multiplied to one another. Graphically, convolution indicates the area of the overlap of the two functions we are trying to convolve. This is of importance as this has a lot of applications in different field like electrical engineering, optics and of course image processing [1].

Now the two images we’d try to convolve are as observed below:

The first one consist of 10 points of 1’s that are randomly placed in our image. On the other hand, the other one consist of a single star shape in the middle. Reminds you of honey stars right? (PS: I was really hungry at the time I was doing this part of the activity… I seriously had to prevent myself from using a pizza as my patter 🙂 ).

Now taking the respective FFT of both images and making use of the per-element multiplication function (.*) in Scilab gives us our result. However, since we hope to see the resulting image, we have to take the inverse Fourier of the result. As discussed earlier, the use of the ifft does not seem to work hence the use of the mfft() plays at hand yet again. This time though we set the mode to 1 so that it’ll take the inverse Fourier instead.

What’s your guess for our resulting image? Remember that the convolution more or less will look like that of the first image BUT with the second image ‘translated’ in it. So theoretically the ten 1’s will be replaced with that of our ‘star’ pattern.

With that in mind, we get… not only one but TEN scattered honey stars! YUM YUM!

What happens if in case the pattern used have larger dimensions than that of our honey stars? We’d still get the same results though this time the patterns at times might overlap with one another.

Familiarizing ourselves with this technique can be of purpose when we have to implement the filters to our original images as you’ll see later on.

CSI NIP: Episode 1 – Lunar Analysis

It’s a common practice in Astrophotography and landscape photography to make use of a technique termed as “stitching”. Like that in sewing, stitching is the process when two or more images are merged resulting to a single image. This technique is used for cases wherein the object is beyond the limit of the lens of the camera. Panoramic images which gives us a wide view of landscapes and scenes are results of stitched images. However, one of the main problems of this technique is the parallax error brought by the instability of your camera or even just the simple change in lighting of your view.

It’s a common practice in Astrophotography and landscape photography to make use of a technique termed as “stitching”. Like that in sewing, stitching is the process when two or more images are merged resulting to a single image. This technique is used for cases wherein the object is beyond the limit of the lens of the camera. Panoramic images which gives us a wide view of landscapes and scenes are results of stitched images. However, one of the main problems of this technique is the parallax error brought by the instability of your camera or even just the simple change in lighting of your view.

For the first episode of our going-detective-with-Scilab, we will try to remedy the error brought by stitched images of a lunar orbiter image taken by NASA. It is clear that the image contains the edges of the ‘stitches’ giving rise to repetitive patterns parallel to the x-axis.

Hmm…. Why does this look so familiar? Taking the FFT of the image shows us why…

From earlier, we have found that the FFT of the pattern containing evenly spaced 1’s along the x and y-axis is a weave. As for this case, it is apparent that the FFT of the repetitive pattern brought by the stitches is simply equal to evenly spaced 1’s along the y-axis! This is the reason as to why FFT’s play an important role in different applications. It’s ability to realize the frequency of the repetitive patterns gives way in enhancing and even removing them.

So now that we know the points in the FFT that’s giving rise to the patterned stitches in our original image, we now create a mask to filter these out. The mask in theory should be composed of 1’s and o’s. If we hope to remove the points along the y-axis, we have to ensure that our mask will have 0 values on these points while the rest of the values will be one. Saving the fft of the image and placing it in Adobe Photoshop, I was able to create a mask that looks something like this:

In order to apply this mask to our FFT, we make use of the powers of convolution. Convolving the unshifted FFT of the original image to that of the fftshifted mask, we get the filtered FFT. However, since we hope to see its effect to our image, we again make use of the mfft(image,1…) function to take the inverse Fourier.

Our result?

Woah! The stitch marks are barely visible!!

If in case you hope to get an even better image, a construction of a better mask can be done. Also, the code I have used for this portion of the activity is placed below for future reference:

CSI NIP: Episode 2 – Brush Strokes

For the last part of this activity, we are now to deal with an image of a painting on a canvas.

This image is a portion of Dr. Vincent Dara’s painting entitled “Frederiksborg”. Through the techniques used earlier, we hope to take out the weave pattern from the canvas so that we’d end up with only the painting. Removing the pattern of the canvas gives us only the details coming from the painting and hence can aid in telling us the strokes used by the painter.

Making use of our program to look at the FFT of this image gives us:

The FFT obviously contains what looks like alternating points. We then assume that these points are the ones that gave way to the pattern brought by the canvas. A filter was yet again created by simply taking note of the locations of these bright spots:

Upon convolving the fftshifted version of this filter to the unshifted fft of the image and taking its inverse FFT finally gives us our filtered image.

Yet again, we were able to take out bulk of the weave-pattern giving us a clear view of the brushstrokes done by the painter. If this is still lacking to your preference, creation of a more accurate filter can be done.

As an additional investigation, we try to look at the fft of the filter. Theoretically, this is supposed to give us the weave-pattern we hope to remove from our original image painting. The resulting fft is as observed.

It does resemble the weave patterns we are seeing however it seems as if it’s still lacking at some point. This simply tells use that the filter used could be further improved.

References:

1. Wikipedia. Convolution. Available at http://en.wikipedia.org/wiki/Convolution#Applications. Accessed July 25, 2012.

2. Maricor Soriano. AP186 Activity 7: Enhancement in the Frequency Domain. 2012.

Personal Note:

For this activity, I’d like to give myself a rating of 10/10 as I was able to successfully do all the requirements. This activity actually got me frustrated as a lot of functions actually don’t work the way they were supposed to. The ifft() function was, for some unknown reason, giving out a different output. In fact, due to my tinkering with the functions, I was able to show the fft’s through the imshow function. How? I had to simply use the ifft() function to the abs() of the fft then take its real parts… I really don’t know how it worked but it gave me the chance to save the images of the fft properly 🙂 Funny right? That’s indeed the life of a scientist… trying to figure out something and then you’d end up with something new..

Activity 5: Enhancement by Histogram Manipulation

July 10, 2012

In the art of photography, light can be considered as either your friend or enemy. A friend as it serves as the illumination of your test subjects which brings your images to life while sadly an enemy as it’s high dependence on light can be a continuous burden to you. Based from my experiences thought, it is far better to have a highly intense light source as the settings in a camera can easily be tweaked to reduce the amount of light entering your optical system. On the other side, there are limited solutions when there is a lack of light. Sometimes even making use of a ‘flash’ still gives us dark images.

So how are we to solve this? Through post-processing of course!

This activity concentrates on the technique in manipulating the histogram in order to adjust the brightness of your image. Take for example the image below:

In order to manipulate the pixels of this image, we first have to convert the image in grayscale as not doing so will further complicate the process. As learned from the previous techniques, the conversion to grayscale as well as displaying the histogram is done by the code snippet below:

Which gives rise to the images below:

It is important to take note here that the grayscale plot of an image is actually called as the graylevel probability distribution function (PDF) once the plot is normalized to the total number of pixels [1]. One can clearly observe that the image is indeed dark as most of the pixels are located on the left portion to the graph which is close to 0 (signifying the darkest pixel).

A more interesting quantity we would be using for this activity is called the cumulative distribution function or CDF. From Wikipedia, a CDF is the area of the the PDF after some time. Simply put, the CDF shows the evolution of the total area of your PDF. This can be taken in Scilab through the use of the cumsum() function as already shown in the code snippet above. With our PDF illustrated above, its corresponding CDF is as seen in the image beside this paragraph.

A more interesting quantity we would be using for this activity is called the cumulative distribution function or CDF. From Wikipedia, a CDF is the area of the the PDF after some time. Simply put, the CDF shows the evolution of the total area of your PDF. This can be taken in Scilab through the use of the cumsum() function as already shown in the code snippet above. With our PDF illustrated above, its corresponding CDF is as seen in the image beside this paragraph.

The manipulation of the histogram is highly dependent on the shape of our CDF. Hence, we would investigate on the effect of the shape of the CDF to our histogram and eventually the output image.

The new CDFs we hope to implement on our image are as illustrated below:

These CDFs were produced through the use of the code snippet below.

Both the linear and quadratic cases were done through simply defining the x2 to be a list starting from 1 to 255. On the other hand, the Gaussian CDF was produced by first plotting a Gaussian curve followed by the cumsum() function. It is also important that since our original CDF is normalized (ranging only from 0 to 1), the last line of the code normalizes our ideal CDFs.

Now having our ideal CDF and the original CDF, the implementation of the ideal CDF is next. This is done through a process called “backprojection”. The steps through this method is listed as follows:

- For each x-values of the original CDF (take note that this ranges from 1 to 255 signifying your pixel intensities),

- It’s corresponding y-value was noted.

- The y-value noted for a specific x-value of the original CDF was then traced to the ideal CDF. We try to find the nearest y-value present in our original CDF

- then we take note of its corresponding x-value in our ideal CDF.

References:

[1] Soriano, Jing. Activity 5 – Enhancement by Histrogram Manipulation manual. Applied Physics 186. National Institute of Physics, UPD.

After finally getting everything fixed and prepped for the activity proper (Did you read my previous post that served as this blog’s prequel? Well if you didn’t… YOU SHOULD by clicking here), I can now finally discuss to you the actual activity:

Have you ever wanted to act all super-spy that’ll make you feel oh so cool? Ever wondered how it felt like to be part of the CSI team? Well I have awesome news for you! The activity for today bring stalking to a whole new level. By the time you finished reading my blog, you’ll be able to have a faint idea of the area of your crush/nemesis/best friend/boss’ house that you can put into good use (such as BLACKMAIL!!! hahaha).

Of course I’m kidding 🙂

With an image of the object you wish to determine its area, proper post-processing is required. What do I mean by that? Well, we hope to have a clean image wherein the object is defined and as much as possible the only one existing in your whole image. The ability to separate the background from the object is also important so the use of the im2bw() function was used before the calculation of the area was done.

There were two methods that was discussed in determining the area of a properly post-processed image. One is through the use of simple pixel counting. Since area is defined as the space occupied by your two-dimensional object, simply counting the number of pixels of your object can already be the rough estimate of its area. This is implemented in Scilab through the simple code as seen below:

But then since I’m an Applied Physics student, challenge is my middle name. The other method is more mathematical and is by far complicated. Fret not though as it only LOOKS complicated:

The equation seen above is the base equation for the Green’s Theorem. It simply indicates that the area of a certain contour (left side of the equation) is equal to the integral of the points on the border of your contour upon further simplication. Converting our integral into its discrete form (the integral is simply just the summation!), we have a friendlier equation to make use of.

What’s essential for this theorem to work is the knowledge of the set of points lying at the boundaries of your contour moving at a certain direction (counterclockwise or clockwise).

Now this is where all hell breaks lose.

Without the SIP toolbox installed in your Scilab, you’d have to be creative and smart enough to figure out a way on how to, not only find the edge of your object, get the list of points on the edges of your objects that is sorted in away that it is tracing the object around a specific direction. (again, I encourage you to read my journey before this whole activity by clicking here).

Thanks to the follow() function available with the SIP toolbox, it immediately outputs the list of your sorted coordinates of the edge of your object. But then since we also need the variables Yi+1 and Xi+1.. we have to figure out a way to shift the list that we have from the follow function by moving a single step. I managed to do this by taking advantage of slicing off portions of the list. First I had to place the first element of my original list into a new list ([x2]). The next list I made was the rest of the elements left which is done by the function:

[x1]=x(2:length(x))

How does this work? The 2 signifies the point (or the element) the start while the length(x) signifies the end (for our case the last element) by which you want to slice off your list. Since we want to shift our list a unit forward, we simply combine x1 and x2.

The rest that we have to do in order to get the area is simply implementing the discrete equation of Green’s theorem. Check out my finished code below:

Now off to the fun part – finding the area of different shapes!

Through the use of my code from Activity 2 in creating square apertures, I’ve use three different sized squares and determined the white spaces in between through the pixel count as well as the Green’s theorem. Below is the tabulated values of the areas calculated as well as the actual area taken through geometry:

What really surprised me from the results was not the zero percent error from the Green’s function but rather the high percent error of the pixel counting method! I mean, the idea of simply counting the pixels inside your shape was basically solving for the area as well… I tried looking to determine where I want wrong but sadly I did not find any lead. Since my squares were generated from Scilab, there’s not blurred edges to be worried about.

As for the Green’s theorem garnering zero percent error, this could have been brought by the straightforwardness of the shape we’ve dealt with. If we have curved shapes instead… like for example circles:

Should we expect greater percent errors for the Green’s theorem?

Surprisingly, the case of the circle shifts it the other way around. The area from the pixel count has smaller percent error (less than 1 for all cases) while the area from the Green’s theorem had greater than one percent errors. The possible reason as to why percent errors exist for the Green’s theorem is because of the generation of the circles. I have used my algorithm from Activity 2 in producing circular apertures and I managed to notice that the smaller the size of my circle is, the less it resembles a perfect circle. Remember that pixels after all made up of small-sized squares.

As a last test to see if my code is functional enough to be used for some real stalking business, I tried to find the area of a triangle:

The area from the Green’s theorem gave a value of 13321 pixel squared. Taking note that the triangle (which I produced from Photoshop and saved it as a .BMP so as to avoid blurred edges) has a base width of 177 pixels and a height of 153. Using the known equation of a triangle:

Area = 0.5*(base*height)

The actual area is 13540.5 giving us a percent error of 1.62%!

With a more or less low percent error for my algorithm (which could’ve come up from the fact that the shapes are not actually made of straight smooth lines but rather small squares of pixels), we are now ready for the big thing 😀

—

The Beijing National Stadium taken from http://www.bestourism.com/items/di/1077?title=The-Beijing-National-Stadium

With China’s recent bird’s nest stadium makings its way to the headlines of almost every newspaper back then… I’ve chosen the simple yet exquisite mega-structure as my point of interest. Using Google’s one of many oh-so-useful applications they’ve made available for their fans: Google Maps, I’ve located Beijing’s National Stadium and zoomed it in enough to fit my browser.

Taking note that the output of the program is in fact in terms of pixels, we have to make use of the things we learned from Activity 1 in converting our units: pixels to meters. It is then essential to make use of the scales in properly converting our units. Thankfully, Google has thought of everything as they have made a scale available in google maps as seen inside the pink border of the image above.

What we need to do is measure the number of pixels for the indicated scale above:

108 pixels is to 100 m

Making use of dimensional analysis, we simply have to multiply our output area to 100m/108pixels for two times so that we’d end up with meter squared instead. Hence , the conversion factor to be multiplied is 0.926.

I’ve post-processed the image above through Photoshop by setting Google maps in map mode (the image above actually came from Google maps in Satellite mode). Converting it into grayscale then into a bitmap mode image and finally deleting the unnecessary pixels… I was left with the image below:

Unbelieveable right? The stadium looks like a perfect shape! Now that’s what a call perfect engineering.

Calling the image in my program, I’ve determined the area to be (Green’s theorem) 87189 meter squared.

For comparison, I tried my best to find the actual area of the bird’s nest. However, one has to be careful in simply finding a value for an area as it does not necessarily mean you have measured the exact same thing. At first I was disappointed when I got the area to be around 258000 square meters! I mean, if that was truly the case… my program is after all, very very wrong! However, this 258 000 meter squared value apparently already included the garden as well as the other land nearby the stadium. The one that I measured was actually ONLY the building itself!

Managing to find the estimate of the building to have an area of only 80,000 square meters, I’ve garnered a percent error of 7.64%! UNBELIEVABLE!

Furthermore, my original plan was to find the area taken up by a certain gigantic lazy pink bunny lying around the mountains of Italy. Don’t believe me? See for yourself by the images below:

Despite the awesomeness of the pink knitted bunny lazing around, I was unable to find a source indicating the total area it has covered. Nevertheless, I put my program into use and determined its area to be 8480 meters. Now that’s ONE BIG BUNNY!

—

To finish this blog post, I’d like to give myself a grade of 10/10. Although deep inside I’d like to give myself a higher grade (after going through the trouble of having to install Ubuntu and Scilab and Imagemagick and Animal and SIP and VMware and trying my best to make my own code in order to replace the follow code but failed terribly)… I wasn’t able to explore the topic that much as I have hoped to. So in the end, I was unable to give out something new to the topic…. Oh well… atleast I finally can brag to my friends that I know how Ubuntu/Lunix systems work now 😀 That’s a good enough reward I guess.

Activity 4 (Prequel): Lunix Lunacy!

July 4, 2012

I’m blogging right now as I wait for the binary source code of Scilab to finish downloading (initially it said 1 day then it toned down to 12 hours and now it’s only 2 hours…) . I’ve been facing the computer for more than 12 hours now and I’m still forcing myself to not back out as I’m slowly, slowly, REALLY SLOWLY progressing… at some point. How’d I end up in this kind of mess? Well… let’s just say that my pride got in the way!

For our latest activity, it required the use of a special function called follow() which sadly was only available with the SIP toolbox. This module was not updated in a way that it fit the Scilab version I have currently installed. Since the follow() function basically just traces the outline of a shape and it follows a certain path (clockwise or counter-clockwise)… this should technically be easy to implement on my own.

Now that was where I went wrong.